I am using ROS Indigo, a Kinect, `freenect.launch` and OpenCV. I do not want to use the `/camera/depth/points` topic to measure distance, because `/camera/depth/image_raw` is said to provide the depth information directly. I need to know the minimum distance of any pixel in front of the camera. I would like each pixel of the depth image to hold its distance from the camera, and, where no object is present, the far-clip-plane value. I can get the image, but I do not know exactly how to extract the depth information from it. I am also trying to write the depth map of a scene to a 16-bit PNG, without any kind of normalization. Depending on your application, there are several ways forward.
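A minimal sketch for the Kinect case follows. It assumes the `image_raw` message has already been converted to a NumPy array, for example with `cv_bridge` via `CvBridge().imgmsg_to_cv2(msg, desired_encoding="passthrough")`. It also assumes the array uses one of two common depth encodings: `16UC1` (millimeters, 0 = no reading) or `32FC1` (meters, NaN = no reading). The function name `min_distance_m` and the default `far_clip_m` are hypothetical, so check the `encoding` field of your own messages.

```python
import numpy as np


def min_distance_m(depth, encoding="16UC1", far_clip_m=10.0):
    """Return (minimum valid distance in meters, depth map in meters).

    Assumed encodings: '16UC1' = uint16 millimeters, '32FC1' = float32 meters.
    Zero, NaN and inf are treated as "no measurement"; in the returned map
    those pixels are replaced by far_clip_m (hypothetical far clip plane).
    """
    if encoding == "16UC1":
        meters = depth.astype(np.float32) / 1000.0
    elif encoding == "32FC1":
        meters = depth.astype(np.float32)
    else:
        raise ValueError(f"unsupported encoding: {encoding}")

    valid = np.isfinite(meters) & (meters > 0)
    filled = np.where(valid, meters, np.float32(far_clip_m))
    # If nothing is in range, report the far clip value as the minimum.
    min_d = float(meters[valid].min()) if valid.any() else float(far_clip_m)
    return min_d, filled
```

Pixels with no return are excluded from the minimum and replaced by the far clip value in the returned map. This gives the per-pixel distances the question asks for without touching `/camera/depth/points`.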

# How to get depth map values from a ZED camera in Python

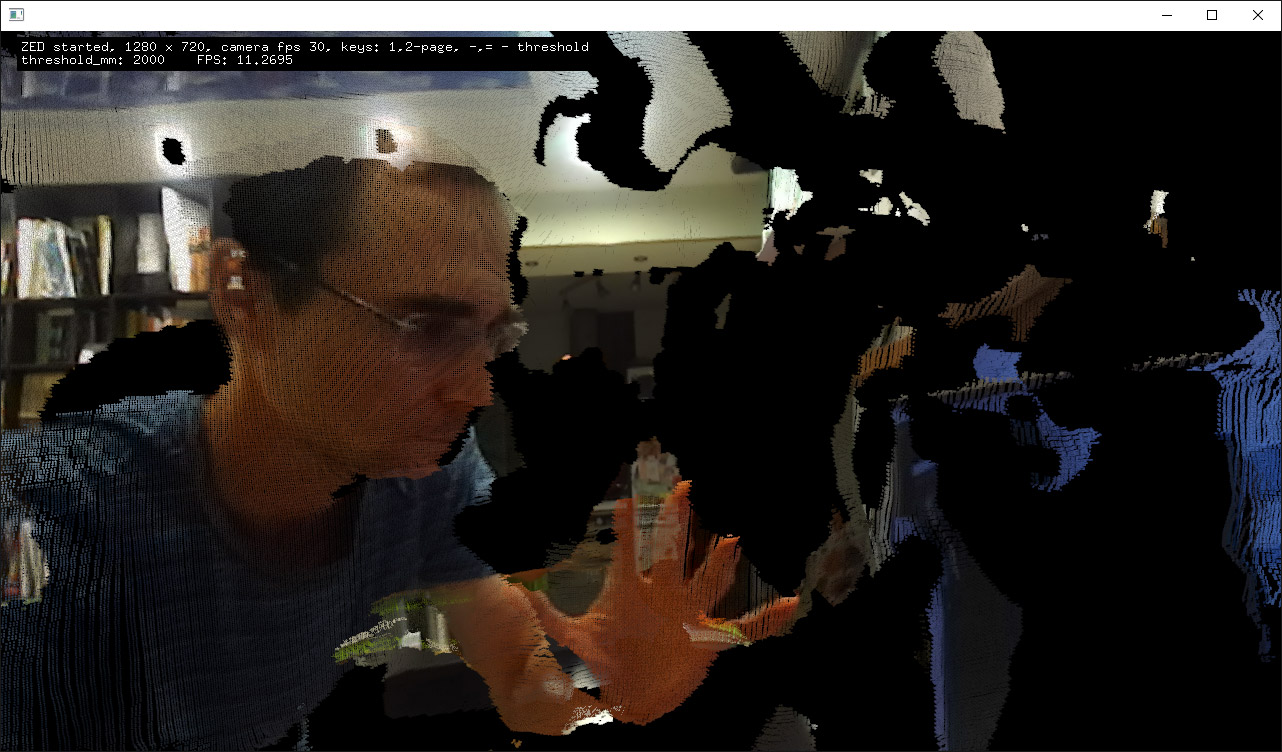

Depth is published as `sensor_msgs::Image` data; I am using the ROS topic `/camera/depth/image_raw`. The Isaac codelet wrapping StereoDNN takes a left rectified image, a right rectified image, and both the intrinsic and extrinsic calibration of the stereo camera. From these it generates a depth frame of size 513x257, using the nvstereonet library from GitHub. At this point, you know how to retrieve image and depth data from ZED stereo cameras. Note: the color depth displayed in the bottom-left frame of the ZED Depth Viewer tool is obtained with the `retrieveImage` API function (C++ - Python) of the ZED SDK. To see the objects in a depth map, you need to open it with an image editor and apply a normalizing filter, which automatically rescales all values to visible grayscale data. Once you have a depth map, you can use the camera specification to compute lengths.
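As a sketch of how the camera specification turns depth into lengths, the standard pinhole model back-projects a pixel (u, v) with depth Z. It uses the focal lengths `fx`, `fy` and the principal point `cx`, `cy`. These intrinsics must come from your own calibration (in ROS, the `camera_info` topic), and the numbers in the example are made up. The helper names are hypothetical.

```python
import math


def pixel_to_point(u, v, z, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth z into camera coordinates (x, y, z).

    Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    """
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)


def length_between(p1, p2, z1, z2, intrinsics):
    """Metric distance between pixels p1=(u1, v1) and p2=(u2, v2) with depths z1, z2.

    intrinsics is a tuple (fx, fy, cx, cy).
    """
    a = pixel_to_point(p1[0], p1[1], z1, *intrinsics)
    b = pixel_to_point(p2[0], p2[1], z2, *intrinsics)
    return math.dist(a, b)


if __name__ == "__main__":
    # Hypothetical intrinsics (fx, fy, cx, cy); replace with your calibration.
    K = (500.0, 500.0, 320.0, 240.0)
    # Two pixels 100 px apart at 2 m depth: approximately 0.4 (meters).
    print(length_between((320, 240), (420, 240), 2.0, 2.0, K))
```

The depth value is Z along the optical axis. The straight-line distance from the camera to a point is therefore `math.dist((0, 0, 0), pixel_to_point(...))`, which is slightly larger than Z for off-center pixels.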

Objects close to the camera have low depth values and therefore appear dark when the saved image is displayed with a standard image viewer. The image obtained with the SAVE command of the ZED Depth Viewer tool is a 16-bit depth map that contains depth values in millimeters, in the range 0-65535. To display a depth image correctly, we suggest not normalizing the depth map values. Instead, use the `retrieveImage` API function (C++ - Python) with the `VIEW::DEPTH` parameter (C++ - Python). If you use OpenCV from Python, choose the OpenCV version that corresponds to the Python version you are using. I used the ZED camera to get depth (https. I get two errors: `initial value of reference to non-const must be an lvalue`.
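The SAVE command's format (16-bit, millimeters, 0-65535) is also what you want for an unnormalized 16-bit PNG. The following minimal NumPy sketch performs that conversion on a float depth map in meters. Mapping NaN and ±inf to 0 is an assumption about how you want to encode missing data, and the function name is hypothetical.

```python
import numpy as np


def meters_to_uint16_mm(depth_m):
    """Convert a float depth map in meters to uint16 millimeters without normalization.

    Assumption: NaN, +inf and -inf mean "no data" and are stored as 0.
    Values are rounded to the nearest millimeter and clamped to 0..65535.
    """
    mm = np.asarray(depth_m, dtype=np.float64) * 1000.0
    mm = np.nan_to_num(mm, nan=0.0, posinf=0.0, neginf=0.0)
    return np.clip(np.rint(mm), 0, 65535).astype(np.uint16)
```

Writing the result with `cv2.imwrite("depth.png", depth_mm)` keeps the full 16 bits, because OpenCV stores `uint16` arrays as 16-bit PNGs. Read it back with `cv2.imread(path, cv2.IMREAD_UNCHANGED)` so the values are not converted to 8 bits.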

Depth maps captured by the ZED store a distance value (Z) for each pixel (X, Y) in the image. The distance is expressed in metric units (meters, for example) and is measured from the back of the camera's left eye to the scene object. The depth map obtained with the `retrieveDepth` API function (C++ - Python) is a matrix of 32-bit depth values. These are expressed in the measurement unit selected when the camera was opened (default: meters). To visualize the map, for example with OpenCV, you must normalize it and rescale it to the 0-255 grayscale range (8-bit image). `max_range` can be the maximum range value selected when opening the camera, the maximum depth value present in the depth map, or a custom value. In the epipolar geometry & stereo vision article of the Introduction to spatial AI series, we discussed two essential requirements for estimating the depth (the 3D structure) of a scene: point correspondence and the cameras' relative position.
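The normalization described above can be sketched in NumPy as follows. It assumes a metric depth map, such as the 32-bit output of `retrieveDepth`, and a `max_range` chosen by one of the three options listed. The function name and the optional `invert` flag are hypothetical. `invert=True` makes near objects bright instead of dark. The result is for display only, so keep the original map for measurements.

```python
import numpy as np


def depth_to_gray8(depth, max_range, invert=False):
    """Rescale a metric depth map to an 8-bit grayscale image for display.

    Values are clipped to [0, max_range] and mapped linearly to 0..255.
    NaN, inf and non-positive values are treated as invalid and shown as 0.
    """
    d = np.asarray(depth, dtype=np.float64)
    valid = np.isfinite(d) & (d > 0)
    scaled = np.clip(np.where(valid, d, 0.0), 0.0, max_range) / max_range
    if invert:
        # Near objects bright, far objects dark; invalid pixels stay black.
        scaled = np.where(valid, 1.0 - scaled, 0.0)
    return np.rint(scaled * 255.0).astype(np.uint8)
```

To use the largest depth in the data as `max_range`, one option is `depth[np.isfinite(depth)].max()`. The returned array can then be shown with `cv2.imshow`.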

When you try to visualize on screen a depth map obtained with the `retrieveDepth` API function or saved from the Depth Viewer tool, you usually find it very dark because you are seeing a map and not an image.
